What is the Model Context Protocol (MCP)? And Why It Matters

7 minutes

Last year, we predicted autonomous AI agents would dominate 2025 and they are. Companies are now racing to create the best autonomous agents like Manus, Google's AI Co-Scientist for research and tools for vibe coding.

The major problem in agent development is simple: AI agents need access to real-world systems to be truly useful. Without it, even the most sophisticated models remain glorified chatbots just talking and taking no actions.

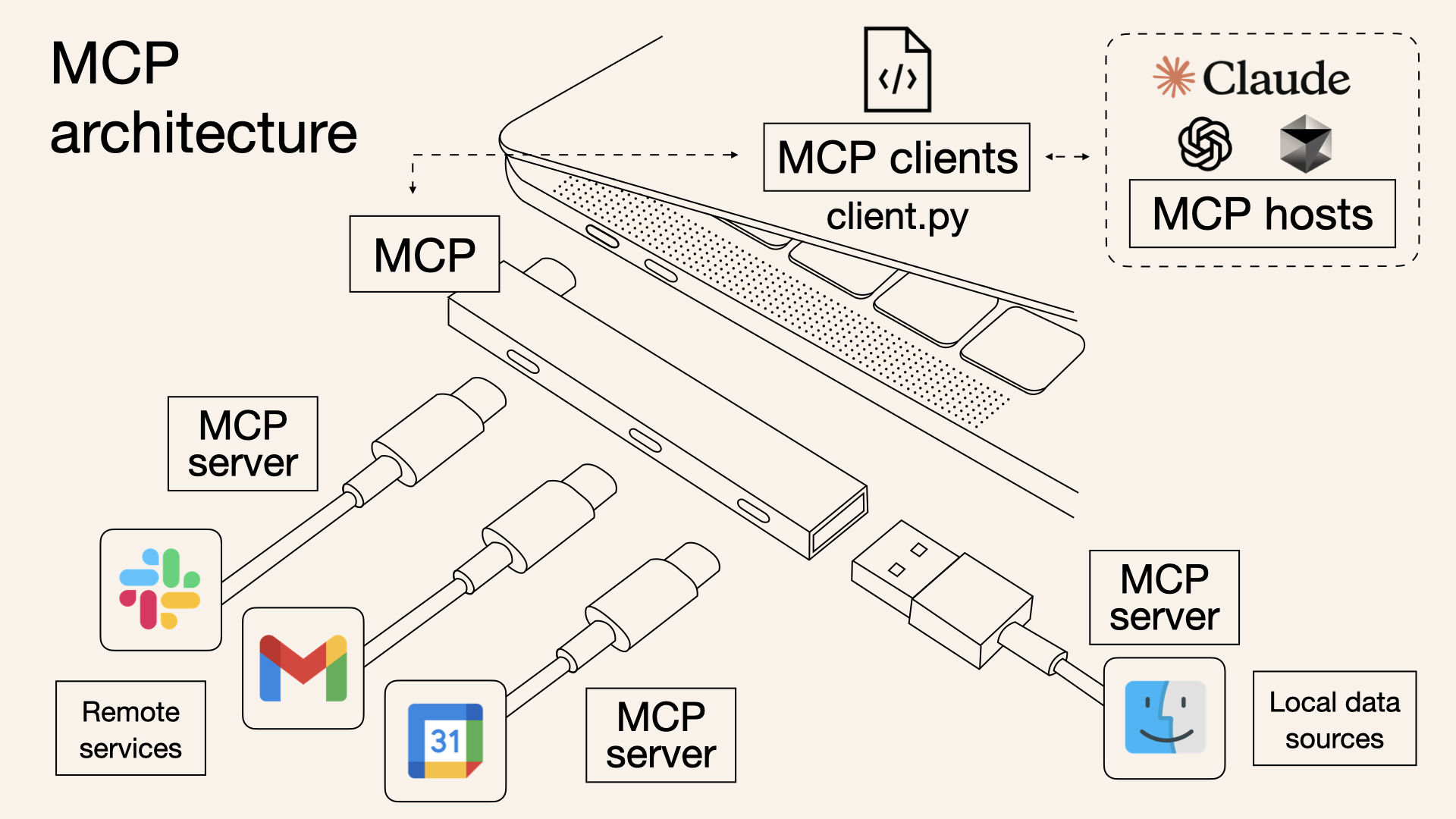

A great illustration of the idea behind the Model Context Protocol (MCP) by Norah Sakal, AI Consultant & Developer. Source: https://norahsakal.com/blog/mcp-vs-api-model-context-protocol-explained/

Anticipating this trend, Anthropic open-sourced the Model Context Protocol (MCP) in November 2024, addressing this need by creating a universal way for AI applications to access contextual information from various data sources.

Before MCP:

Prompt

AI Output

After MCP:

Prompt

AI Output

Slow Start, Rapid Acceleration

Despite its potential, MCP adoption was initially slow due to:

- Limited documentation and examples

- Lack of major platform integration

- Developer focus on other AI trends like model performance

- Unclear technical benefits for business users

The turning point came at the AI Engineer Summit in February 2025. According to Latent Space's swyx, live demos of MCP-powered assistants scheduling meetings, analyzing codebases, and searching databases transformed theoretical possibilities into practical applications overnight.

Related Resource: Listen to the Latent Space podcast featuring Sander Schulhoff, Learn Prompting's CEO, discussing insights from over 1,600 research papers analyzed in The Prompt Report.

By March 2025, four key factors made MCP the go-to standard:

- Major platform support: GitHub, Slack and others added official MCP connections

- Ready-to-use examples: The community built MCP connections for dozens of popular tools through the official registry

- Business demand: Companies wanted AI assistants that could handle complex tasks across systems

- Developer-friendly tools: SDKs in Python, TypeScript, Java, and Kotlin made implementation straightforward

The community response was unprecedented. Within weeks, the Python SDK garnered over 2,200 GitHub stars, while the TypeScript implementation exceeded 1,600, growth rates surpassing many established open-source projects.

Why It Matters

The protocol's beauty lies in its simplicity. Developers can now use pre-built components to connect AI applications to:

- Email systems and messaging platforms

- Document repositories and knowledge bases

- SQL and NoSQL databases

- Code repositories and development environments

- Calendar apps and scheduling systems

- Business tools like CRMs and project management software

This democratization meant that even small teams could build sophisticated AI agents without specialized expertise in each integration.

Now, let's get to the news!

Generative AI Tools Updates

Anthropic Updates: Claude AI Web Search Capability, Claude "Think" Tool

-

Claude AI Web Search Capability: Web search functionality integrated into Claude AI assistant

Key features:

- Accesses current information from across the internet

- Overcomes fixed cutoff date limitation

- Displays sources with citations

- Available in preview to paid US subscribers

-

Claude "Think" Tool: A dedicated space for structured thinking during complex tasks

Key features:

- Allows Claude to pause mid-task to process new information

- Improves performance in complex reasoning tasks

- Enhances policy adherence and sequential decision-making

- Shows significant performance improvements in benchmarks

Google Updates: NotebookLM Interactive Mind Maps, Gemini 2.5 Pro, and Gemini Canvas and Audio Overview

-

NotebookLM Interactive Mind Maps: Visual summaries of uploaded source materials in branching diagram format

Key features:

- Presents main topics and related ideas visually

- Allows zooming, scrolling, expanding/collapsing branches

- Enables direct questioning about specific topics

- Available to all NotebookLM users

-

Gemini 2.5: New AI model designed as a "thinking model" with improved reasoning

Key features:

- Ranks first on LMArena benchmark

- Enhanced performance in reasoning tasks and coding

- 1 million token context window (expanding to 2 million)

- Maintains multimodal capabilities for text, audio, images, video, and code

-

Gemini Canvas and Audio Overview: Two new collaborative features for Gemini

OpenAI API Updates: PDF Support, New Audio Models, and o1-Pro Model

-

PDF Support in API: Direct PDF file support in OpenAI's API

Key features:

- Available for vision-capable models (GPT-4o, GPT-4o-mini, and o1)

- Extracts both text and visual information

- Supports file upload API or direct Base64 encoding

- Integrates with existing Chat Completions endpoint

-

New Audio Models: Updated speech-to-text and text-to-speech models

Key features:

- Improved word error rate compared to previous Whisper models

- Better performance with accents, dialects, and noisy environments

- Instructable text-to-speech with customizable speaking styles

- Integration with Agents SDK for simplified development

-

o1-Pro Model: Advanced reasoning model in the O1 series

Key features:

- 200,000 token context window

- Up to 100,000 maximum output tokens

- Available exclusively through Responses API

- Support for function calling and structured output formatting

Codeium Windsurf Wave 5

Windsurf Wave 5 is the latest update to Codeium's AI-powered code editor

Key features:

- Enhanced Windsurf Tab functionality

- Improvements to latency, quality, and reliability

- Unified contextual awareness across various interfaces

- Available to all users including free accounts

Other News

-

Zoom Advances Small Language Models for Agentic AI: Zoom develops competitive small language models for practical AI applications in a federated approach to enable AI agents that work together.

-

AI2 Releases OLMo 2 32B: Allen Institute for AI introduces a fully open-source language model that outperforms GPT-3.5 and GPT-4o mini while using significantly less computing resources.

-

Google Acquires Wiz for $32 Billion: Google makes its largest acquisition to date, purchasing cloud security leader Wiz to strengthen its position against AWS and Microsoft Azure.

-

Tencent Releases Hunyuan-T1: Tencent unveils the first ultra-large Hybrid-Transformer-Mamba MoE language model designed for advanced reasoning capabilities.

-

Mistral AI Releases Mistral Small 3.1: New Apache 2.0 licensed model sets performance benchmarks with multimodal capabilities and 128k context window.

-

NVIDIA Introduces GR00T N1: NVIDIA releases the first open humanoid robot foundation model alongside simulation frameworks to accelerate development.

-

Stability AI Unveils Stable Virtual Camera: New AI technology transforms 2D images into immersive 3D videos with advanced camera controls without requiring complex reconstruction.

-

xAI Acquires Video Generation Company Hotshot: Video generation startup Hotshot joins Elon Musk's xAI, will sunset current service by March 30.

Curated Gems: Updated Prompt Hacking Section

We've updated the Prompt Hacking section of the Prompt Engineering Guide with new techniques.

Interested in prompt hacking and AI safety? Test your skills on HackAPrompt, the largest AI safety hackathon. You can register here.

It now includes 20 prompt hacking techniques:

- Simple Instruction Attack: Basic commands to override system instructions

- Context Ignoring Attack: Prompting the model to disregard previous context

- Compound Instruction Attack: Multiple instructions combined to bypass defenses

- Special Case Attack: Exploiting model behavior in edge cases

- Few-Shot Attack: Using examples to guide the model toward harmful outputs

- Refusal Suppression: Techniques to bypass the model's refusal mechanisms

- Context Switching Attack: Changing the conversation context to alter model behavior

- Obfuscation/Token Smuggling: Hiding malicious content within seemingly innocent prompts

- Task Deflection Attack: Diverting the model to a different task to bypass guardrails

- Payload Splitting: Breaking harmful content into pieces to avoid detection

- Defined Dictionary Attack: Creating custom definitions to manipulate model understanding

- Indirect Injection: Using third-party content to introduce harmful instructions

- Recursive Injection: Nested attacks that unfold through model processing

- Code Injection: Using code snippets to manipulate model behavior

- Virtualization: Creating simulated environments inside the prompt

- Pretending: Roleplaying scenarios to trick the model

- Alignment Hacking: Exploiting the model's alignment training

- Authorized User: Impersonating system administrators or authorized users

- DAN (Do Anything Now): Popular jailbreak persona to bypass content restrictions

- Bad Chain: Manipulating chain-of-thought reasoning to produce harmful outputs

Check it out!

From Learn Prompting Team: Our AI Security Masterclass Now Features 9 Top Experts

Our 6-week Masterclass on AI Security now features nine AI security specialists who will share their cutting-edge insights and hands-on expertise with you.

Meet your instructors and guest speakers:

- Sander Schulhoff: CEO of Learn Prompting, creator of HackAPrompt, and leader of AI security workshops at Microsoft, OpenAI, Deloitte, Dropbox, and Stanford.

- Jason Haddix: Former CISO at Ubisoft, Head of Security at Bugcrowd, and a top-ranked bug bounty hacker, with extensive experience in penetration testing and AI security.

- Richard Lundeen: Principal Software Engineering Lead at Microsoft's AI Red Team, developing PyRit, a foundational AI security framework.

- Sandy Dunn: Cybersecurity leader with over 20 years of experience, project lead for the OWASP Top 10 Risks for LLM Applications, and an adjunct professor in cybersecurity.

- Joseph Thacker: Principal AI Engineer at AppOmni, top AI security researcher, and winner of Google Bard's LLM bug bounty competition.

- Donato Capitella: Offensive security expert and AI researcher with over 300,000 YouTube learners, teaching how to build and break AI systems.

- Akshat Parikh: Elite bug bounty hacker, ranked in the top 21 in JP Morgan's Bug Bounty Hall of Fame, and AI security researcher backed by OpenAI, Microsoft, and DeepMind researchers.

- Pliny the Prompter: Well-known AI jailbreaker, specializing in bypassing major AI model defenses.

- Johann Rehberger: Former Microsoft Azure Red Team leader, known for pioneering techniques like ASCII Smuggling and AI-powered C2 attacks.

Thanks for reading this week's newsletter!

If you enjoyed these insights about AI developments and would like to stay updated, you can subscribe below to get the latest news delivered straight to your inbox.

See you next week!

Valeriia Kuka

Valeriia Kuka, Head of Content at Learn Prompting, is passionate about making AI and ML accessible. Valeriia previously grew a 60K+ follower AI-focused social media account, earning reposts from Stanford NLP, Amazon Research, Hugging Face, and AI researchers. She has also worked with AI/ML newsletters and global communities with 100K+ members and authored clear and concise explainers and historical articles.