Learn from the Creator of the World's 1st AI Red Teaming Competition

Sander Schulhoff created HackAPrompt, the world's first and largest AI Red Teaming competition, which helped make OpenAI's models 46% more resistant to prompt injections. His work has been cited by OpenAI, DeepMind, Anthropic, IBM, and Microsoft. He won Best Paper at EMNLP 2023 (selected from 20,000+ submissions) and has led AI security workshops at OpenAI, Microsoft, Stanford, and Deloitte.


Your AI Systems Are Vulnerable... Learn How to Secure Them!

Prompt injections are the #1 security vulnerability in LLMs today. They enable attackers to steal sensitive data, hijack AI agents, bypass safety guardrails, and manipulate AI outputs for malicious purposes. In 2023, over 10,000 hackers competed in HackAPrompt to exploit these vulnerabilities, generating the largest dataset of prompt injection attacks ever collected. Every major AI lab now uses this data to secure their models. Without proper defenses, your AI systems remain exposed to these critical threats.

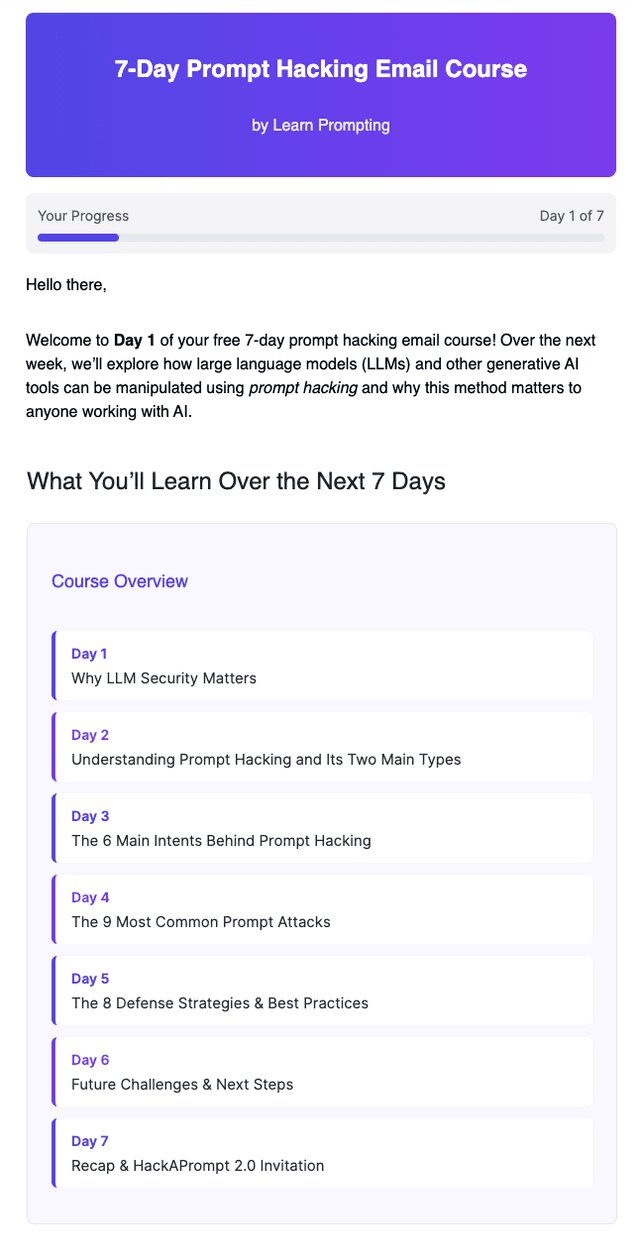

About the Course

This hands-on masterclass teaches you to identify and fix vulnerabilities in AI systems before attackers exploit them. Using the HackAPrompt playground, you'll practice both attacking and defending real AI systems through practical exercises, not just theory. You'll learn to detect prompt injections, jailbreaks, and adversarial attacks while building robust defenses against them.

The course prepares you for the AI Red Teaming Professional Certification (AIRTP+) exam, a rigorous 24+ hour assessment that validates your expertise. We've trained thousands of professionals from Microsoft, Google, Capital One, IBM, and Walmart who now secure AI systems worldwide. Join them in becoming AIRTP+ certified and protecting the AI systems your organization depends on.

Transform Your Career in AI Security

Master Advanced AI Red-Teaming Techniques

Break into any LLM using prompt injections, jailbreaking, and advanced exploitation techniques. Learn to identify and exploit AI vulnerabilities at a professional level.

Execute Real-World Security Assessments

Use role-play attacks to test enterprise chatbots for data leaks, check AI image generators for harmful outputs, and run advanced prompt injection attacks against production systems.

Command Premium Salaries

Qualify for roles like AI Security Specialist ($150K-$200K), AI Red Team Lead ($180K-$220K), and AI Safety Engineer ($160K-$210K).

Build Robust AI Defenses

Implement security measures throughout the AI development lifecycle. Learn to secure AI/ML systems by building resilient models and integrating defense strategies.

Join an Elite Network

Connect with professionals from Microsoft, Google, Capital One, IBM, ServiceNow, and Walmart. Expand your network and collaborate with industry leaders.

Earn Industry Recognition

Earn the AIRTP+ certification, the most recognized AI security credential. Validate your expertise and position yourself as a leader in AI security.

Learn from the World's Top AI Security Experts

Joseph Thacker

Solo Founder & Top Bug Bounty Hunter

As a solo founder and security researcher, Joseph has submitted over 1,000 vulnerabilities across HackerOne and Bugcrowd.

Valen Tagliabue

Winner of HackAPrompt 1.0

An AI researcher specializing in NLP and cognitive science. Part of the winning team in HackAPrompt 1.0.

David Williams-King

AI Researcher under a Turing Award Recipient

Research scientist at MILA, working under Turing Award winner Yoshua Bengio on his Safe AI For Humanity team.

Leonard Tang

Founder and CEO of Haize Labs

NYC-based AI safety startup providing cutting-edge evaluation tools to leading companies like OpenAI and Anthropic.

Richard Lundeen

Microsoft's AI Red Team Lead

Principal Software Engineering Lead for Microsoft's AI Red Team and maintainer of Microsoft PyRIT.

Johann Rehberger

Founded a Red Team at Microsoft

Built Uber's Red Team and discovered attack vectors such as ASCII Smuggling. Has found bug bounty vulnerabilities in every major GenAI model.

Is This Masterclass Right for You?

Perfect for:

Everything You Need to Succeed

In-depth training from industry leaders

Hands-on labs and exercises

Hack production AI systems

Network with AI security professionals

Review materials anytime

Industry-recognized credential