AI That Learns by Watching You?


Hey there!

Welcome to the latest edition of the Learn Prompting newsletter.

We're heading into the era of agentic AI, which is finally taking shape rather than remaining an abstract idea. Anthropic's Computer Use led the charge with an AI that can interact with your computer, and it gave us a real look at what the future of AI agents could be.

So, how do we get these agents to work like we do? There's a pretty clear pattern here: companies are starting with tools that watch how you handle basic tasks, learn from them, and then replicate your behavior. That data is probably being used to train the first generation of AI agents, setting the stage for versions that are smarter, more accurate, and capable of tackling more complicated workflows.

Latest Examples of AI Learning from Human Behavior

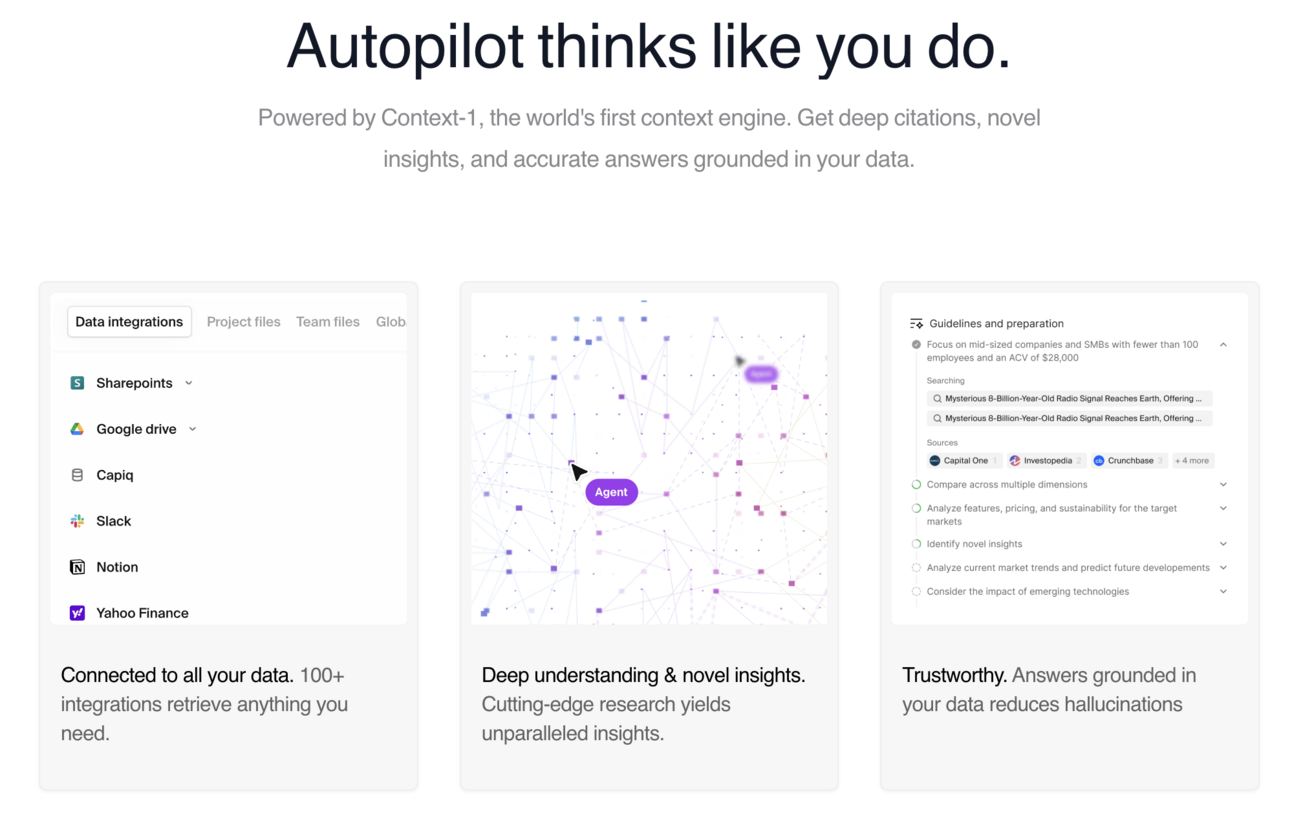

Context's Autopilot: An AI That Works the Way You Do

Context has launched Autopilot, an AI tool designed to make your job easier by learning how you work. Here's what it does:

- Observes and Learns: Autopilot watches how you handle tasks like writing emails, organizing files, or solving problems.

- Adapts to Your Style: Over time, it understands your preferences and adjusts to how you like to work.

- Uses Familiar Tools: It works with tools you already use, like Google Drive, Slack, or SharePoint.

- Handles Tasks for You: Autopilot can create documents, reply to emails, or analyze data—saving you time and effort.

ChatGPT's Mac Desktop App: AI That "Sees" What You See

The macOS version of ChatGPT is evolving to become a more hands-on helper. Right now, it's in beta, but it already has some exciting features:

- Desktop Awareness: ChatGPT can "see" your desktop and interact directly with open apps.

- Works With Specific Tools: It integrates with coding tools like VS Code and Xcode, terminal apps like Terminal and iTerm, and even basic text editors.

- Observes and Assists: For now, it provides suggestions and answers based on what you're working on.

Codeium's Windsurf Editor: Coding Suggestions, Before You Ask

Codeium's Windsurf Editor is an AI-powered coding tool designed to make programming more efficient by learning from how you work. Here's what it offers:

- Learns Your Workflow: It analyzes your past actions to predict what you'll do next, making suggestions or automating repetitive tasks.

- Understands Complex Codebases: Windsurf Editor can study relationships between files, group relevant components, and help you navigate large projects.

- Works Without Micromanagement: It doesn't need constant input—it proactively performs tasks and supports your workflow, acting like an intelligent assistant.

Other GenAI Market Updates

- Perplexity Shopping: Shop like a pro with "Snap to Shop." "Buy with Pro" offers free shipping and handles checkout. Shopify integration is in the works.

- Replit Agent: Turn your ideas into apps—share a concept, and it writes and deploys the code. Examples include health dashboards and campus parking maps.

- ElevenLabs Projects: Advanced tools for text-to-audio creation with multi-voice customization, bulk adjustments, and faster playback.

- Google Learn About: A conversational learning tool offering quick articles, sources, and image-based prompts to explore new topics.

- Google Workspace AI Generator: Create visuals directly in Google Docs using Gemini-powered AI.

AI for Coders

We've just released a step-by-step guide on how to effectively use generative AI chatbots for coding. It's packed with actionable tips to help you automate routine tasks.

What's inside:

- GenAI Tools for Code

- Automated Code Generation

- AI-Assisted Code Completion

- Debugging with AI

- Code Optimization and Refactoring

- Writing Automated Tests

- Documentation of Your Code with AI

- Understanding Code

- Learning New Programming Languages or Frameworks

- Advanced: AI Agents for Coding

- Ethical Considerations

New Tools for Developers

- Gemini AI models are now accessible via the OpenAI Library and REST API

- CopilotKit & LangGraph introduced CoAgents for integrating LangGraph agents into React apps

- Microsoft open-sourced TinyTroupe, a Python library for simulating personas
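To make the first item concrete, here is a minimal sketch of what a chat request to Gemini through its OpenAI-compatible REST endpoint might look like. It only builds the request and does not send it. The base URL and the `gemini-1.5-flash` model name are assumptions based on Google's announcement, so check the current Gemini docs before relying on them. The helper names `build_chat_request` and `send` are made up for this illustration.

```python
import json
import urllib.request

# Assumption: the OpenAI-compatible base URL from Google's announcement.
# Verify it against the current Gemini API documentation.
GEMINI_OPENAI_BASE_URL = "https://generativelanguage.googleapis.com/v1beta/openai/"


def build_chat_request(api_key, user_message, model="gemini-1.5-flash"):
    """Build (but do not send) an OpenAI-style chat completions request.

    The model name is an assumption; swap in whichever Gemini model you use.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=GEMINI_OPENAI_BASE_URL + "chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the first reply (needs a real API key)."""
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]
```

If you already use the official `openai` Python package, the same idea should apply: point the client's `base_url` at the endpoint above and pass your Gemini API key.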

The Latest In Prompting: Is Prompt Engineering Dead?

Two new studies examined how prompt formatting and prompting techniques affect the performance of large language models (LLMs).

Here's what they found:

- Prompt Structure Matters: Changing the format of a prompt can affect performance by up to 40%.

- Model Preferences Are Real: Experimenting with formats that match a specific model's strengths can lead to better results.

- Small Changes, Big Impact: Minor tweaks in phrasing or formatting can produce drastically different answers.

- Use Examples for Better Results: Adding few-shot prompting examples can make the AI more reliable.

- Larger Models Handle Prompts Better: Models like Llama3-70B are more adaptable to different prompt styles.
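To see what "changing the format" can look like in practice, here is a small sketch that renders the same task in three layouts: plain text, Markdown, and JSON. The three layouts and the function names are our own illustrative choices, not the exact templates the studies used. You could send each variant to your model and compare the answers.

```python
import json


def render_plain(task, text):
    """Plain text: instruction, blank line, then the input."""
    return f"{task}\n\n{text}"


def render_markdown(task, text):
    """Markdown: the same content under two headings."""
    return f"## Task\n{task}\n\n## Input\n{text}"


def render_json(task, text):
    """JSON: the same content as a structured object."""
    return json.dumps({"task": task, "input": text}, indent=2)


# Hypothetical registry of formats to compare.
FORMATS = {
    "plain": render_plain,
    "markdown": render_markdown,
    "json": render_json,
}


def build_variants(task, text):
    """Return one prompt string per format, keyed by format name."""
    return {name: render(task, text) for name, render in FORMATS.items()}


if __name__ == "__main__":
    variants = build_variants(
        "Classify the sentiment as positive or negative.",
        "The update made the app much faster.",
    )
    for name, prompt in variants.items():
        print(f"--- {name} ---\n{prompt}\n")
```

Only the wrapper changes between variants, so any difference in the model's answers comes from the format rather than the content.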

Tasks where prompting made the biggest difference

In tasks requiring creativity or complex reasoning, prompt design had the greatest impact:

- Creative Tasks: Writing scripts, stories, or creating visualizations

- Data Visualization: Generating descriptive or graphical summaries of data

- Algorithmic and Computational Problem Solving: Complex programming tasks

Tasks where AI models were most consistent

For straightforward, knowledge-based tasks, AI models performed consistently:

- IT Problem Solving

- Business Solutions

- Multilingual Programming and Debugging

What this means for you

Prompt engineering isn't dead—it's evolving. To get the most out of LLMs:

- Experiment with formats to match the model's preferences

- Use few-shot examples to guide the model and improve reliability

- Opt for larger models for complex or creative tasks

Read our thoughts on that topic.
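As a quick illustration of the few-shot tip, here is a minimal sketch of a prompt builder that places a handful of worked examples before the real question. The `Input:`/`Output:` labels and the function name are assumptions for this example. Use whatever labels suit your model and task.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt from (input, output) example pairs.

    The prompt ends with an open "Output:" line for the model to complete.
    """
    parts = [instruction, ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")  # blank line between examples
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Classify the sentiment as positive or negative.",
        [
            ("I loved this movie.", "positive"),
            ("The service was terrible.", "negative"),
        ],
        "The battery lasts all day.",
    )
    print(prompt)
```

Keeping the examples in a plain list makes it easy to swap them out or change how many you include while you experiment.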

Resources from Learn Prompting

Dario Amodei recently highlighted an important reality: as AI models become more powerful, their potential for harm grows alongside their benefits. Just as English is becoming the "hottest new coding language," it's also becoming the tool people use to exploit AI.

Here's the surprising part: many of the winners from HackAPrompt 1.0 had no traditional tech background! When attacks can be written in plain English, developers struggle to predict vulnerabilities and enforce safeguards. The solution is AI Red Teams: experts who identify and fix weaknesses before they can be exploited.

Here are the two resources you can use to get started in Red Teaming:

HackAPrompt 2.0 with up to $100,000 in prizes

We're thrilled to announce HackAPrompt 2.0 with up to $100,000 in prizes! This competition brings together people from all backgrounds to tackle one of the most important challenges of our time: making AI safer for everyone.

AI systems are becoming integrated into every part of our daily lives, but they're not infallible. Vulnerabilities in these systems can lead to unintended harms, such as bypassing safety guardrails or eliciting dangerous outputs. HackAPrompt 2.0 invites participants to AI Red Team these cutting-edge models, test their limits, and expose weaknesses across five unique tracks:

- Classic Jailbreaking: Breaking AI models to elicit unintended responses

- Agentic Security: Testing AI systems embedded in decision-making processes

- Attacks of the Future: Exploring hypothetical threats we haven't seen yet

- Two Secret Tracks: To be revealed closer to the competition!

We'll announce more details in the following weeks. Join the waitlist to be notified when we launch!

Live Course on Red Teaming

We're teaching a course on AI Red Teaming, led by Sander Schulhoff, the CEO of Learn Prompting and creator of HackAPrompt! About Sander:

- CEO of Learn Prompting, the 1st Prompt Engineering guide on the internet

- Award-winning deep reinforcement learning researcher who has co-authored papers with OpenAI, Scale AI, Hugging Face, Microsoft, the Federal Reserve, and Google

- His post-competition paper on HackAPrompt was awarded Best Theme Paper at EMNLP 2023 out of 20,000 submitted papers

Alongside Sander Schulhoff, notable guest speakers include:

- Pliny the Prompter: The most renowned AI Jailbreaker, who has successfully jailbroken every AI model released to date

- Akshat Parikh: Former AI security researcher at a startup backed by OpenAI and DeepMind researchers

- Joseph Thacker: Principal AI Engineer & security researcher specializing in application security and AI

- More announced soon!

You'll learn alongside other students in our cohort including:

- CTOs, CISOs, Chief Security Architects, Principal Security Engineers, Technical Leads, Site Reliability Engineers, PhD Researchers

- Core contributors to the OWASP® Foundation Top 10 LLM Risks

- Members of the National Institute of Standards and Technology (NIST) AI Safety Consortium

- Government leaders shaping AI security policy

- Cybersecurity professionals transitioning into AI Red Teaming careers

The course costs $900 now, and the price will increase to $1,200 on December 1st. It starts in just 15 days, on December 5th.

If you have a Learning & Development budget to use before the year ends, this course is a fantastic way to invest in your skills—and it's reimbursable by many companies.

Thanks for reading this week's newsletter!

If you enjoyed these insights about AI developments and would like to stay updated, you can subscribe below to get the latest news delivered straight to your inbox.

See you next week!

Valeriia Kuka

Valeriia Kuka, Head of Content at Learn Prompting, is passionate about making AI and ML accessible. Valeriia previously grew a 60K+ follower AI-focused social media account, earning reposts from Stanford NLP, Amazon Research, Hugging Face, and AI researchers. She has also worked with AI/ML newsletters and global communities with 100K+ members and authored clear and concise explainers and historical articles.